Today we release our first self-hosted Auphonic Speech Recognition Engine, using the open-source Whisper model by OpenAI!

With Whisper, you can now integrate automatic speech recognition in 99 languages into your Auphonic audio post-production workflow, without creating an external account and without extra costs!

Whisper Speech Recognition in Auphonic

Until now, Auphonic users had to choose one of our integrated external service providers (Wit.ai, Google Cloud Speech, Amazon Transcribe, Speechmatics) for speech recognition. This meant that audio files were transferred to an external server and processed with external computing power, which users had to pay for through their own accounts with these providers.

The new Auphonic Speech Recognition uses Whisper, which OpenAI published as an open-source project. Open source means that the publicly shared GitHub repository contains the complete Whisper package, including source code, examples, and research results.

However, automatic speech recognition is a very time- and hardware-intensive process that can be incredibly slow on a standard home computer without special GPUs.

So we decided to integrate this service and offer you automatic speech recognition (ASR) with Whisper, processed on our own hardware just like any other Auphonic processing task. This gives you several benefits:

- No external account is needed anymore to run ASR in Auphonic.

- Your data doesn't leave our Auphonic servers for ASR processing.

- No extra costs for external ASR services.

- Additional Auphonic pre- and post-processing for more accurate ASR, especially for Multitrack Productions.

- The quality of Whisper ASR is absolutely comparable to the “best” services in our comparison table.

How to use Whisper?

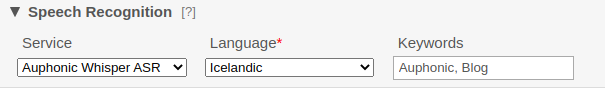

To use the Auphonic Whisper integration, just create a production or preset as usual and select “Auphonic Whisper ASR” as the “Service” in the Speech Recognition section.

This option appears automatically for Beta and paying users. If you are a free user but want to try Whisper, just ask us for access!

When your Auphonic speech recognition is done, you can download your transcript in different formats and edit or share it with the Auphonic Transcript Editor.
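Which export formats are available is determined by Auphonic. As an illustration of one common timed transcript format, here is a minimal sketch that renders timed segments as SRT subtitles. It assumes each segment is a dict with `start` and `end` (in seconds) and `text` keys, which matches the shape of the `segments` list returned by the open-source `whisper` package's `transcribe()`. The helper names `format_timestamp` and `segments_to_srt` are hypothetical and are not part of Auphonic or Whisper.

```python
from typing import Iterable, Mapping


def format_timestamp(seconds: float) -> str:
    """Format a time in seconds as an SRT timestamp (HH:MM:SS,mmm)."""
    total_ms = round(seconds * 1000)
    hours, rem = divmod(total_ms, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, millis = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{millis:03d}"


def segments_to_srt(segments: Iterable[Mapping]) -> str:
    """Render segments with 'start', 'end' and 'text' keys as numbered SRT cues.

    Assumption: segments are already sorted by start time, as Whisper emits them.
    """
    cues = []
    for index, seg in enumerate(segments, start=1):
        start = format_timestamp(seg["start"])
        end = format_timestamp(seg["end"])
        # Whisper segment texts usually start with a space, so strip it.
        cues.append(f"{index}\n{start} --> {end}\n{seg['text'].strip()}\n")
    # SRT separates cues with a blank line.
    return "\n".join(cues)


if __name__ == "__main__":
    example = [
        {"start": 0.0, "end": 2.5, "text": " Hello and welcome."},
        {"start": 2.5, "end": 65.25, "text": " Today we talk about speech recognition."},
    ]
    print(segments_to_srt(example))
```

The same segment list could be rendered as WebVTT or plain text with small changes, which is one reason timed segments are a convenient intermediate form for transcripts.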

For more details about all our integrated speech recognition services, please visit our Speech Recognition Help, and watch this channel for upcoming Whisper updates.

Why Beta?

We decided to launch Whisper for Beta and paying users only, because Whisper was only published at the end of September and there has not been enough time to test every use case thoroughly.

Another issue is the required computing power: to scale our GPU infrastructure appropriately, we need a beta phase in which we test the service while monitoring hardware usage, to make sure our servers are not overloaded.

Conclusion

Automatic speech recognition services are evolving very quickly, and we've seen major improvements over the past few years.

With Whisper, we can now perform speech recognition on our own GPU hardware without extra costs, and no external services are required anymore.

Auphonic Whisper ASR is available for Beta and paying users now; free users can ask for Beta access.

You are very welcome to send us feedback (directly in the production interface or via email), whether you notice something that works particularly well or discover any problems.

Your feedback is a great help in improving the system!