After an initial private beta phase, we are happy to open the Auphonic automatic speech recognition integration to all of our users!

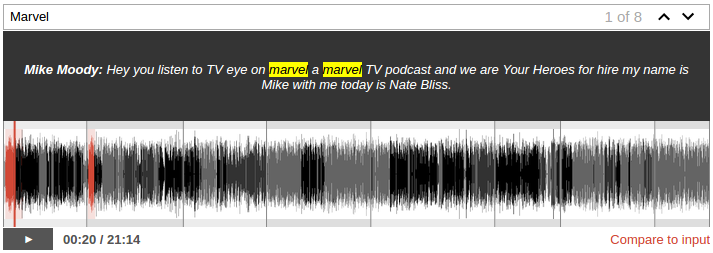

Our WebVTT-based audio player with search in speech recognition transcripts and exact speaker names.

We built a layer on top of multiple engines to offer affordable speech recognition in over 80 languages. This blog post also includes 3 complete examples in English and German.

Search within Audio and Video

One of the main problems of podcasts, audio and video is search.

Speech recognition is an important step to make audio searchable: although automatically generated transcripts won't be perfect and might be difficult to read (spoken text is very different from written text), they are very valuable if you try to find a specific topic within a one-hour audio file or the exact time of a quote in an audio archive.

Audio players can easily integrate transcripts so that it's possible to search and seek within audio (see screenshot above or examples below) and content management systems let you search within all episodes of a podcast.

Furthermore, transcripts enable search engines to index audio/video files (audio SEO anyone?): at the moment, there are already a few podcast-specific search engines which take data from feeds, audio metadata, chapters and transcripts (e.g. audiosear.ch by Pop Up Archive, fyyd.de).

With the introduction of WebVTT, a web standard for captions and subtitles for video/audio, we have a common and well-defined format which general-purpose search engines (Google) can also use to index audio and video.

Speech Recognition Integration in Auphonic

Auphonic has built a layer on top of a few external speech recognition services: our classifiers generate metadata during the analysis of an audio signal (music segments, silence, multiple speakers, etc.) to divide the audio file into small and meaningful segments, which are then sent to the speech recognition engine.
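To make the segmentation step more concrete, here is a minimal sketch that splits a mono signal on silence only. It is not Auphonic's classifier (which also detects music and multiple speakers); the function name `split_on_silence`, the RMS threshold and the minimum silence length are illustrative assumptions.

```python
import math


def split_on_silence(samples, sample_rate, frame_ms=20, threshold=0.01, min_silence_ms=300):
    """Return (start_s, end_s) tuples for the non-silent regions of `samples`.

    Hypothetical stand-in for a real classifier: a frame counts as silent when its
    RMS is below `threshold`, and a run of silent frames at least `min_silence_ms`
    long closes the current segment. Shorter pauses stay inside a segment.
    """
    frame_len = max(1, int(sample_rate * frame_ms / 1000))
    min_silent_frames = max(1, math.ceil(min_silence_ms / frame_ms))
    n_frames = math.ceil(len(samples) / frame_len)

    segments = []
    seg_start = None  # frame index where the current non-silent segment began
    silent_run = 0    # number of consecutive silent frames seen inside that segment
    for i in range(n_frames):
        frame = samples[i * frame_len:(i + 1) * frame_len]
        rms = math.sqrt(sum(x * x for x in frame) / len(frame))
        if rms >= threshold:
            if seg_start is None:
                seg_start = i
            silent_run = 0
        elif seg_start is not None:
            silent_run += 1
            if silent_run >= min_silent_frames:
                # End the segment at the first frame of the silent run
                end = i - silent_run + 1
                segments.append((seg_start * frame_len / sample_rate, end * frame_len / sample_rate))
                seg_start, silent_run = None, 0

    if seg_start is not None:
        # Close the last segment, trimming any short trailing silence
        end = n_frames - silent_run
        segments.append((seg_start * frame_len / sample_rate,
                         min(end * frame_len, len(samples)) / sample_rate))
    return segments
```

Each returned time range could then be cut out and sent to the recognizer on its own; pauses shorter than `min_silence_ms` are deliberately kept inside a segment so that sentences are not chopped up.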

The external speech services support multiple languages and return text results for all audio segments. Afterwards, we combine the results from all segments, assign meaningful timestamps, and add simple punctuation and structure to the result text.
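The combination step can be sketched as follows, assuming (hypothetically) that each engine response is a list of words with start/end times relative to the beginning of its segment. The `SegmentResult` type and both functions are illustrative, not Auphonic's code, and the punctuation is deliberately naive: one capitalized sentence per segment.

```python
from dataclasses import dataclass
from typing import List, Tuple

Word = Tuple[str, float, float]  # (text, start_s, end_s)


@dataclass
class SegmentResult:
    """Hypothetical engine result for one audio segment."""
    offset: float      # segment start within the full file, in seconds
    words: List[Word]  # word times are relative to the segment start


def merge_segments(results: List[SegmentResult]) -> List[Word]:
    """Shift word times by each segment's offset and return one global timeline."""
    timeline = []
    for seg in sorted(results, key=lambda r: r.offset):
        for text, start, end in seg.words:
            timeline.append((text, seg.offset + start, seg.offset + end))
    return timeline


def to_sentences(results: List[SegmentResult]) -> str:
    """Naive punctuation: one capitalized sentence, ending in '.', per segment."""
    sentences = []
    for seg in sorted(results, key=lambda r: r.offset):
        text = " ".join(word for word, _, _ in seg.words)
        if text:
            sentences.append(text[0].upper() + text[1:] + ".")
    return " ".join(sentences)
```

Sorting by offset means the segments can be recognized in parallel and returned in any order.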

This is especially interesting if you use our multitrack algorithms: then we can send audio segments from the individual, processed track of the current speaker (not the combined mix of all speakers). Hence we get much better speaker separation, which is very helpful if multiple speakers are active at the same time or if you use background music/sounds.

In addition, we can automatically assign speaker names to all transcribed audio segments, so you know exactly who said what and when.
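A minimal sketch of speaker assignment, assuming each speaker's track already comes with a list of active intervals (for example from a per-track voice activity detector; this input format and the function name `assign_speakers` are hypothetical). Every transcribed segment is labeled with the speaker whose track overlaps it the most.

```python
def assign_speakers(segments, activity):
    """Label each (start_s, end_s) segment with the most active speaker.

    `activity` maps a speaker name to a list of (start_s, end_s) intervals in which
    that speaker's track is active. Segments without any overlap get None.
    """
    def overlap(a, b):
        # Length of the intersection of two time intervals (0 if disjoint)
        return max(0.0, min(a[1], b[1]) - max(a[0], b[0]))

    labelled = []
    for seg in segments:
        best, best_total = None, 0.0
        for speaker, intervals in activity.items():
            total = sum(overlap(seg, iv) for iv in intervals)
            if total > best_total:
                best, best_total = speaker, total
        labelled.append((seg[0], seg[1], best))
    return labelled
```

Choosing the largest overlap rather than any overlap keeps short interjections from another track from relabeling a whole segment.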

To activate speech recognition within Auphonic, you have to connect your Auphonic account to an external speech recognition service on the External Services page.

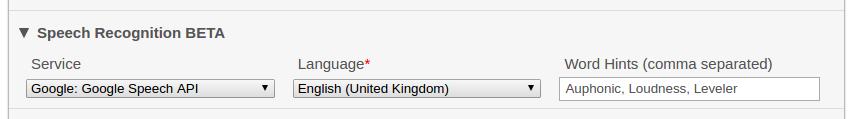

When creating a production or preset, select the speech recognition engine and set its parameters (language, keywords) in the Speech Recognition BETA section.

Single- and Multitrack Examples (in English and German)

Now let's take a look at a few examples.

As we produce a WebVTT file with speech recognition results, any audio/video player with WebVTT support can be used to display the transcripts. Here we will use the open source Podigee Podcast Player, which supports search in WebVTT transcripts, displays podcast metadata like chapter marks, and is themeable, responsive, embeddable and much more.
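Because the transcript is exchanged as WebVTT, producing one from timestamped segments is straightforward. This sketch writes cues with the standard `hh:mm:ss.ttt --> hh:mm:ss.ttt` timing line and uses WebVTT's `<v Name>` voice span for speaker names; the input tuple layout and the function names are assumptions for illustration, not Auphonic's output code.

```python
def format_timestamp(seconds: float) -> str:
    """Format seconds as a WebVTT timestamp (hh:mm:ss.ttt)."""
    ms = round(seconds * 1000)
    hours, ms = divmod(ms, 3_600_000)
    minutes, ms = divmod(ms, 60_000)
    secs, ms = divmod(ms, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{ms:03d}"


def escape_cue_text(text: str) -> str:
    """Escape characters that have a special meaning in WebVTT cue text."""
    return text.replace("&", "&amp;").replace("<", "&lt;").replace(">", "&gt;")


def to_webvtt(cues) -> str:
    """Build a WebVTT document from (start_s, end_s, speaker_or_None, text) tuples."""
    lines = ["WEBVTT", ""]
    for start, end, speaker, text in cues:
        lines.append(f"{format_timestamp(start)} --> {format_timestamp(end)}")
        body = escape_cue_text(text)
        # <v Name> is WebVTT's voice span, used here to carry the speaker name
        lines.append(f"<v {speaker}>{body}" if speaker else body)
        lines.append("")  # a blank line terminates each cue
    return "\n".join(lines)
```

For example, `format_timestamp(3725.5)` yields `01:02:05.500`.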

In all 3 examples, the Google Cloud Speech API was used as the speech recognition engine, without any further manual editing of the generated transcripts.

Example 1: English Singletrack

An example from the first 10 minutes of Common Sense 309 by Dan Carlin.

Link to the generated transcript: HTML transcript

Try to navigate within the audio file in the player below, search for Clinton, Trump, etc.:

Example 2: English Multitrack

A multitrack automatic speech recognition transcript example from the first 20 minutes of TV Eye on Marvel - Luke Cage S1E1.

Link to the generated transcript: HTML transcript

As this is a multitrack production, the transcript and audio player include exact speaker names as well.

You can also see that the recognition quality drops if multiple speakers are active at the same time – for example at 01:04:

Example 3: German Singletrack

As a reminder that our integrated services are not limited to English speech recognition, we added an additional example in German. All features demonstrated in the previous two examples also work in over 80 languages (although the recognition quality might vary).

Here we use automatic speech recognition to transcribe radio news from Deutschlandfunk (Deutschlandfunk Nachrichten vom 11. Oktober 2016, 15:00; the news bulletin of 11 October 2016 at 3 p.m.).

Link to the generated transcript: HTML transcript

As official newsreaders speak in a very structured and clear way, the recognition quality is also very high (try searching for Merkel, Putin, etc.):

If you want to use the Podigee Podcast Player in your own podcast, take a look at our example sites (English Singletrack, English Multitrack, German Singletrack) to see how to integrate it into an HTML page.

Documentation about the player configuration can be found here and the source code is on GitHub. In case of any questions, don't hesitate to ask the helpful Podigee team.

UPDATE:

Here is also an example in Portuguese by Practice Portuguese, using a customized version of the Podigee player:

Portuguese Example.

Integrated Speech Services: Wit.ai and Google Cloud Speech API

During our beta phase, we support the following two speech recognition services: Wit.ai and the Google Cloud Speech API.

- Google Cloud Speech API is the speech-to-text engine developed by Google and supports over 80 languages.

- 60 minutes of audio per month are free; for more, see Pricing (about $1.5/h).

- It is possible to add keywords to improve speech recognition accuracy for specific words and phrases or to add additional words to the vocabulary. For details see Word and Phrase Hints.

- Wit.ai, owned by Facebook, provides an online natural language processing platform, which also includes speech recognition.

- It supports many languages, but you have to create a separate Wit.ai service for each language!

- Wit is free, including for commercial use. See FAQ and Terms.
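Using the Google figures quoted above (60 free minutes per month, then about $1.5 per hour), a rough monthly estimate is simple arithmetic. The defaults reflect this post and may be outdated; the function name is hypothetical, and Google's actual billing may round durations differently.

```python
def estimate_monthly_cost(audio_minutes: float, free_minutes: float = 60,
                          usd_per_hour: float = 1.5) -> float:
    """Rough monthly Google Cloud Speech cost in USD, using the figures quoted in this post.

    Assumption: usage above the free quota is billed linearly at `usd_per_hour`,
    ignoring any rounding of billed durations.
    """
    billable_minutes = max(0, audio_minutes - free_minutes)
    return billable_minutes / 60 * usd_per_hour
```

For example, 10 hours (600 minutes) of audio in one month leaves 540 billable minutes, i.e. 9 h at $1.5/h, about $13.50.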

The fact that Wit.ai is free makes it especially interesting. However, the outstanding feature of the Google Speech API is the possibility to add keywords, which can improve the recognition quality a lot!

We automatically send words and phrases from your metadata (title, artist, chapters, track names, tags, etc.) to Google, and you can add additional keywords manually (see screenshot above). This provides context for the recognizer and allows the recognition of lesser-known names (e.g. the podcast host) or out-of-vocabulary words.
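A sketch of how metadata could be turned into word and phrase hints. The metadata keys (`title`, `artist`, `chapters`, `track_names`, `tags`) and the helper names are hypothetical; the returned dictionary mirrors the `languageCode` and `speechContexts[].phrases` fields of the `config` object in Google's v1 REST `speech:recognize` request. Auphonic's own request code is not shown in this post.

```python
def build_phrase_hints(metadata, extra_keywords=()):
    """Collect unique, non-empty phrases from metadata plus manually added keywords.

    The metadata keys used here are hypothetical, chosen to mirror the fields
    named in the text (title, artist, chapters, track names, tags).
    """
    candidates = [metadata.get("title"), metadata.get("artist")]
    for key in ("chapters", "track_names", "tags"):
        candidates.extend(metadata.get(key, []))
    candidates.extend(extra_keywords)

    phrases, seen = [], set()
    for phrase in candidates:
        phrase = (phrase or "").strip()
        # Skip empty entries and case-insensitive duplicates, keep first spelling
        if phrase and phrase.lower() not in seen:
            seen.add(phrase.lower())
            phrases.append(phrase)
    return phrases


def recognition_config(language_code, phrases):
    """Mirror the `config` object of Google's v1 REST `speech:recognize` request.

    Only the language and the word/phrase hints are set; audio encoding and
    sample rate fields are left out of this sketch.
    """
    return {"languageCode": language_code, "speechContexts": [{"phrases": phrases}]}
```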

We will add additional speech services if they can provide better quality for some languages, offer a lower price or faster recognition. Please let us know if you need one!

Auphonic Output Formats

Auphonic produces 3 output files from speech recognition results: an HTML transcript (readable by humans), a JSON or XML file with all data (readable by machines) and a WebVTT subtitles/captions file as an exchange format between systems.

- HTML transcript examples: EN Singletrack, EN Multitrack, DE Singletrack

- The HTML transcript contains the transcribed text with timestamps for each new paragraph; hovering the mouse shows the time of each text segment. Speaker names are displayed for multitrack productions, sections are generated automatically from chapter marks, and the audio metadata is included as well.

- The transcription text can be copied as is into WordPress or other content management systems, so that you can search within the transcript and find the corresponding timestamps (if you don't have an audio player which supports search in WebVTT/transcripts).

- WebVTT examples: EN Singletrack, EN Multitrack, DE Singletrack

- WebVTT is the open specification for subtitles, captions, chapters, etc. The WebVTT file can be added as a track element within the audio/video element, for an introduction see Getting started with the HTML5 track element.

- It is supported by all major browsers, and many other systems already use it (screen readers, (web) audio players with WebVTT display and search, software libraries, etc.).

- It is possible to add other time-based metadata as well: not only the transcription text, but also speaker names, styling or any other custom data like GPS coordinates.

- Because the format is well defined, search engines could parse WebVTT files referenced in audio/video elements, which would make audio and video searchable!

- One could link to an external WebVTT file in an RSS feed; then podcast players and other feed-based systems could parse the transcript as well (for details see this discussion).

- These possibilities qualify WebVTT as a great exchange format between different systems: audio players, speech recognition systems, human transcriptions, feeds, search engines, CMS, etc.

- JSON/XML examples: EN Singletrack, EN Multitrack, DE Singletrack

- The JSON or XML file contains all the speech recognition details: text, timestamps, confidence values and paragraphs.
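To illustrate why WebVTT works well as an exchange format, here is a small parser that extracts cues and searches them, roughly what a player with transcript search does. It handles only the basics (timing lines, cue text, `<v>` and other tags, HTML entities) and skips the header, cue identifiers, NOTE blocks and cue settings; it is a sketch with hypothetical function names, not a full implementation of the WebVTT specification.

```python
import html
import re

# Timing line "start --> end"; hours are optional, cue settings after the end time are ignored
TIMING = re.compile(r"((?:\d+:)?\d{2}:\d{2}\.\d{3})\s+-->\s+((?:\d+:)?\d{2}:\d{2}\.\d{3})")


def parse_timestamp(ts: str) -> float:
    """Convert 'hh:mm:ss.ttt' or 'mm:ss.ttt' to seconds."""
    seconds = 0.0
    for part in ts.split(":"):
        seconds = seconds * 60 + float(part)
    return seconds


def parse_cues(vtt_text: str):
    """Return (start_s, end_s, text) tuples; blocks without a timing line are skipped."""
    cues = []
    blocks = re.split(r"\n\s*\n", vtt_text.replace("\r\n", "\n").strip())
    for block in blocks:
        lines = block.split("\n")
        for i, line in enumerate(lines):
            match = TIMING.match(line)
            if match:
                text = " ".join(lines[i + 1:])
                # Drop tags such as <v Speaker>, then decode entities like &amp;
                text = html.unescape(re.sub(r"<[^>]+>", "", text))
                cues.append((parse_timestamp(match.group(1)), parse_timestamp(match.group(2)), text))
                break
    return cues


def search(cues, term: str):
    """Case-insensitive substring search; returns the start time of every matching cue."""
    needle = term.lower()
    return [start for start, _, text in cues if needle in text.lower()]
```

A player can seek to any of the returned start times, which is what makes search within audio possible.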

Tips to Improve Speech Recognition Accuracy

Audio quality is important:

- reverberant audio is very difficult; put the microphone as close to the speaker as possible

- try to avoid background sounds and noises during recording

- no bad-quality Skype/Hangouts connections

- don't use background music (unless you use our multitrack version)

- pronunciation and grammar are important

- dialects are more difficult to understand; use the correct language variant if available (e.g. English-UK vs. English-US)

- don't interrupt other speakers (this makes a big difference!)

- don't mix languages

- adding keywords and metadata is a big help for the Google Speech API

- when using metadata and keywords, it's possible to recognize special names, terms and out-of-vocabulary words

- as always, correct metadata is important!

- if you record a separate track for each speaker, use our multitrack speech recognition

- this gives better recognition results and exact speaker timing information

- background music or sounds can be put into a separate track so they don't confuse the recognizer

Public Beta

Our speech recognition system is still in (public) beta. Although it should work quite reliably now, be aware that there might be problems which cannot be fixed in a very short time. Also, some formats or data structures could be changed, added or removed.

The speech recognition is also integrated in our API, so that one can automate the whole workflow or process audio collections (please let us know if you process big collections!).

We would also like to encourage developers from other projects (transcriptions, audio editors, podcast hosters, audio players, podcatchers, search engines, ...) to build on top of a common exchange format like WebVTT. Then multiple systems can work together and make searchable audio a reality!

VIDEO:

If you understand German, take a look at the following video from the SUBSCRIBE 8 conference:

Spracherkennung für Podcasts (Speech Recognition for Podcasts)